Should Evaluators Take a Step Back? Enabling Frontline Staff to Lead the Learning Process

May 30, 2022 | 6 Minute Read

“We must learn!” is a cry commonly heard on international development programs, often resulting in ineffective quarterly “pause and reflects” that occasionally include evidence. In an increasingly complex world, the development community must do better. Prioritizing the observations of frontline staff is crucial to actually learning so that we can adapt and improve programming.

Facilitating workshops to support learning can feel like an obligation rather than a beneficial activity. The frontline staff experiencing change first-hand are often not at the center of reflective exercises, limiting their influence on decision-making, learning, and adaptation. This is a problem: interventions that do not incorporate learning from frontline staff will struggle to be effective.

The Iraq Durable Communities and Economic Opportunities (DCEO) project wanted to change that. Implementing an adapted version of the Hierarchical Card Sorting (HCS) method allowed us to design and conduct a learning experience that prioritized frontline staff. They were not just participants in the learning exercise, but co-creators and co-analyzers.

The value in our approach was clear. We observed that participatory learning was absorbed and translated into action more effectively. Critically, we showed that HCS can be adapted to support a variety of programmatic learning needs.

What is Hierarchical Card Sorting?

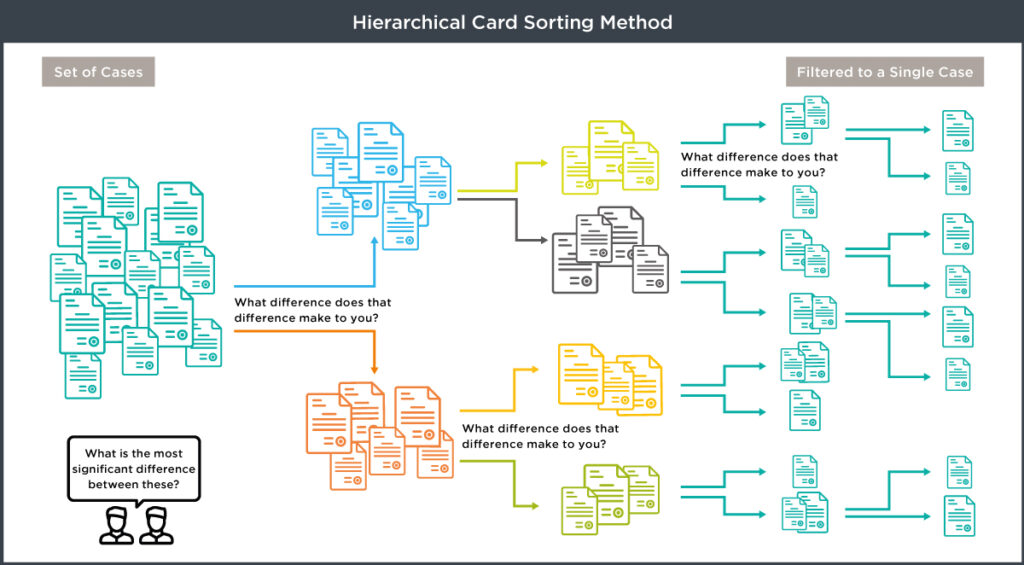

HCS is a participatory exercise that can be tailored to serve different purposes. It can be used to identify gaps in knowledge and experiences, to evaluate differences, or to support reflective learning. HCS works as follows: first, a group of “cases” is selected as the subject of the exercise. The cases can represent any relevant topic, such as targeted stakeholders, project activities, or targeted locations. Each case is written on a card and put into a single pile. Participants are then asked: “what is the most significant difference between these cases?”

The cases are sorted into two piles representing the two sides of the chosen difference. Participants are then asked to explain why that difference was most important: “what difference does that difference make?” Answers are recorded and these steps are repeated until each pile contains a single case or participants cannot identify any significant differences between cases in a pile. This creates a “tree” structure (see figure below) that prompts reflection on how the identified differences informed the program. The final step is a weighting exercise using binary questions to understand features about the cases (such as vulnerability of different stakeholders) and to rank them according to these features.
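For readers who find it easier to follow a procedure as code, the sorting steps above can be sketched roughly as follows. This is only an illustration under stated assumptions, not part of the HCS guide or of the DCEO workshops: the names `Pile`, `sort_cases`, `leaves`, and `ask` are hypothetical, the cases and answers are placeholders, and the final weighting exercise is not modelled. In a real workshop, `ask` is the group discussion itself.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical shape of a workshop answer: (difference, why it matters,
# cases on one side). None means no significant difference was identified.
Split = Optional[Tuple[str, str, List[str]]]


@dataclass
class Pile:
    cases: List[str]
    difference: Optional[str] = None  # "most significant difference"
    why: Optional[str] = None         # "what difference does that difference make?"
    first: Optional["Pile"] = None    # cases on one side of the difference
    second: Optional["Pile"] = None   # cases on the other side


def sort_cases(cases: List[str], ask: Callable[[List[str]], Split]) -> Pile:
    """Split a pile repeatedly until it holds one case or no difference is named."""
    pile = Pile(cases=list(cases))
    if len(cases) <= 1:
        return pile
    answer = ask(cases)
    if answer is None:
        return pile
    difference, why, first_side = answer
    first = [c for c in cases if c in first_side]
    second = [c for c in cases if c not in first_side]
    if not first or not second:
        raise ValueError("a difference must divide the pile into two non-empty piles")
    pile.difference, pile.why = difference, why
    pile.first = sort_cases(first, ask)
    pile.second = sort_cases(second, ask)
    return pile


def leaves(pile: Pile) -> List[List[str]]:
    """Return the final piles of the tree, first side before second side."""
    if pile.first is None or pile.second is None:
        return [pile.cases]
    return leaves(pile.first) + leaves(pile.second)


# Placeholder answers standing in for what participants would say.
scripted = {
    ("Group A", "Group B", "Group C", "Group D"): (
        "placeholder difference 1", "placeholder reason 1", ["Group A", "Group B"]),
    ("Group A", "Group B"): (
        "placeholder difference 2", "placeholder reason 2", ["Group A"]),
}


def ask(cases: List[str]) -> Split:
    return scripted.get(tuple(cases))


tree = sort_cases(["Group A", "Group B", "Group C", "Group D"], ask)
print(leaves(tree))
```

Each `Pile` keeps both the difference and the recorded reason, which mirrors the point of the exercise: the explanations attached to each split matter as much as the final piles.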

By asking staff to examine the reasons they treat cases differently, HCS exposes the mental models and biases brought to day-to-day work. This is especially valuable for programs like DCEO working with diverse identity groups. Critically, HCS recognizes that not all evidence can be reduced to numbers. Observational evidence, which is often qualitative, is frequently more useful for informing program adaptations, particularly when provided by frontline staff.

Do Not Fear the Redesign

Learning methods like HCS are most useful when tailored to a project’s learning needs and interpersonal dynamics. During DCEO we fought our inclination to rigorously follow the process outlined in Rick Davies’ HCS guide. Instead, we asked ourselves: what do we want to achieve with this exercise? Prioritizing our desired learning outcome allowed us to be creative and structure the HCS workshops to suit the project’s needs and the way our Community Cohesion Officers (CCOs) operate. CCOs support the project by facilitating community dialogues within and between various communities and identity groups in Iraq. When designing learning activities, we felt that mimicking this locally-led approach would empower our CCOs to own the learning process.

We made several adaptations, but the three most relevant were:

- We ensured that CCOs played an active role in designing the learning exercise. For example, CCOs selected the cases for sorting, with each case representing an identity group within the community they were working with. This engaged participants by ensuring the discussion was highly relevant to their work.

  Similarly, during the workshop we asked for opinions on how certain questions were phrased. The standard guidance suggests that the selection of a “most significant difference” should be followed by the question: “what difference does that difference make?” However, in Arabic this question lacks clarity. Following the CCOs’ guidance, we rephrased it to “why does that difference matter to you and your work as a CCO?” This not only translated better, but also prompted participants to consider how differences could guide future engagements with Iraqi and Kurdish communities.

- We turned HCS into a group exercise. Rather than a facilitator interviewing each CCO separately, we encouraged them to discuss amongst themselves and challenge each other regarding the most significant difference and why it mattered. We didn’t limit discussion, providing sufficient time to reach a collective answer. Discussion empowered each individual to challenge and analyze their own answers and biases rather than rely on an external consultant for the analysis. Overall, this resulted in a more dynamic experience that yielded deeper reflection for the staff involved.

- We held a learning and analysis workshop. We wanted our CCOs to not just participate, but to own their learning by analyzing the HCS findings themselves. We held an additional workshop after the initial exercise, bringing each group together to present their findings. They then compared findings and identified patterns. From the discussion, the CCOs identified a list of lessons learned, and, more importantly, a list of actions to guide their work going forward. They also produced a list of recommendations to share with project leadership.

Learning is most useful when participants feel part of it; we must workshop with people, not at them. At every step of the way we ensured the CCOs were not just involved, but leading. They selected the cases, co-defined the weighting questions, and analyzed their own learning and findings. This was essential on two counts.

Firstly, the co-design meant the workshop addressed the topics they felt were most important, reflecting the reality of their work. Secondly, the learning and analysis workshop supported the CCOs to process and reflect on their own learning. This meant that they could engage in deeper, more strategic conversations as a group and translate their learning into actionable steps, both for themselves and for their leadership. Critically, they did this themselves rather than it being done for them in isolation.

It can feel intimidating to adapt an established process, especially one created by someone as well-respected as Rick Davies. Never let this stop you! We frequently consulted with Rick and he encouraged us to explore the possibilities of HCS. Our aim was to put our frontline staff at the center of the process, which wouldn’t have been possible without adapting HCS the way we did.

What We Learned from Learning

Our experience of implementing HCS on the DCEO project was ultimately a fruitful one. We came away with several ideas we believe can be useful for international development organizations seeking to engage staff in learning exercises.

The first lesson is to put frontline staff at the center of learning structures, from participation to interpretation. Frontline staff should not only be participants in learning exercises like HCS (i.e. provide the data), but they should also be involved, or better yet, lead, the design of the exercise and the interpretation of findings. This is a fantastic way to cement learning and increase the likelihood it will be translated into real actions and changes.

The second lesson is that learning methods, even well-established ones like HCS, should never be set in stone. Complex contexts call for nuance; doggedly applying conventional methods won’t yield useful results. Adaptations can occur both before and during learning workshops, based on the reactions and needs of the participants. Similarly, never be afraid to break out of the “pause-and-reflect” box. Trying something new can be beneficial, even if it takes time to learn, adapt, and implement the new approach.

The final lesson is that evidence-based learning isn’t a numeric or quarterly function. Putting frontline staff at the center of learning works best when it complements the project’s structure. Usually, this means conducting exercises at appropriate junctures in a project’s timeline, rather than following a regular schedule. This has further benefit: it will be easier to implement learning that is broken down by project phase rather than by an arbitrary time period.

Sarah Ghattass is an independent strategy and results consultant, specializing in MEL, research and strategy development in the areas of public policy, global health, gender equality, and addressing drivers of instability and conflict. She has adapted participatory evaluation methods and created MEL systems for complex projects using actor-based change frameworks, stakeholder mapping, and behavioural change models. Sarah enjoys facilitating design thinking workshops, bringing together various stakeholders to develop theories of change, conduct in-depth analyses of problems, brainstorm solutions, and refine strategies and operating processes. She strives to build bridges between experts across sectors by creating learning opportunities to share knowledge and have candid conversations about the challenges within the development sector.

This blog was co-authored by Sarah and Chemonics’ Senior Monitoring, Evaluation, and Learning (MEL) and Research Specialist Niki Wood.

Banner photo caption: An Iraqi woman in a headscarf speaks into a microphone during a meeting.

Posts on the blog represent the views of the authors and do not necessarily represent the views of Chemonics.