Why Now Is the Time To “Pause and Reflect” on the Way We Approach Learning

April 4, 2023 | 6 Minute Read

Development programmes often fall back on repetitive, tick-box learning efforts. It is time to break this cycle and put people back at the centre of learning.

“New year, new me!” Who hasn’t fallen into the temptation to view the new year as a clean slate, a time to reflect on past habits and what this year can bring? We certainly tend to do this for our programmes, seeing the new financial year as a fresh start to deliver even more positive change than before.

However, do we ever truly learn from the previous year? Behavioural science, psychology, and related disciplines have much to say about mechanisms of human learning, and we are increasingly giving more attention to how we learn as individuals. Yet why do we not apply this to our programmes?

Programmes, at their heart, are designed and guided by people, and they engage people when they are delivered. Still, we often fail to acknowledge this critical factor in our programmatic learning. Instead, as we have discussed in an earlier post, we revert to extractive and time-consuming engagements: often dry, static “pause and reflect” sessions that feed quarterly reports, happen when no one has the time or impetus to engage, and frequently do not actually help us learn. We can, and should, do better.

Learning to learn

Learning happens for each of us in a variety of ways – it’s not always the same way each time, and it unfolds differently based on the context or circumstances. Simply put – it can be chaotic! When programmes and donors fail to acknowledge this, it perpetuates the idea that learning is “one size fits all”. At the same time, we have all seen how challenging it can be to embrace the chaos. For example, it has taken time for the sector as a whole to welcome the need for adaptive programming in complex environments. So where do we start?

One approach is to focus just as much on how we’ve learned as what we learned, bringing the people at the centre of our learning – the project staff, partners, the client – to the forefront. This can help us tailor learning approaches to their needs, break away from a step-by-step process, and not be afraid to adapt when things are not working. Ultimately, it means embracing the chaos!

Tailoring learning for the people at the centre of it

Tailoring learning, of course, is not straightforward. Yet even if it takes time to think through, it is worthwhile for the value of reflection that comes out of it – and it can be fun! In our experience, it comes down to a few key questions:

What do you need to learn about?

Why do you want to learn, and what purpose will it serve?

And, most importantly…

Who needs to learn?

These questions can guide you to find learning approaches that are useful but – critically – also accessible to those you are helping to learn. We have three examples of how we have applied this on programmes to demonstrate!

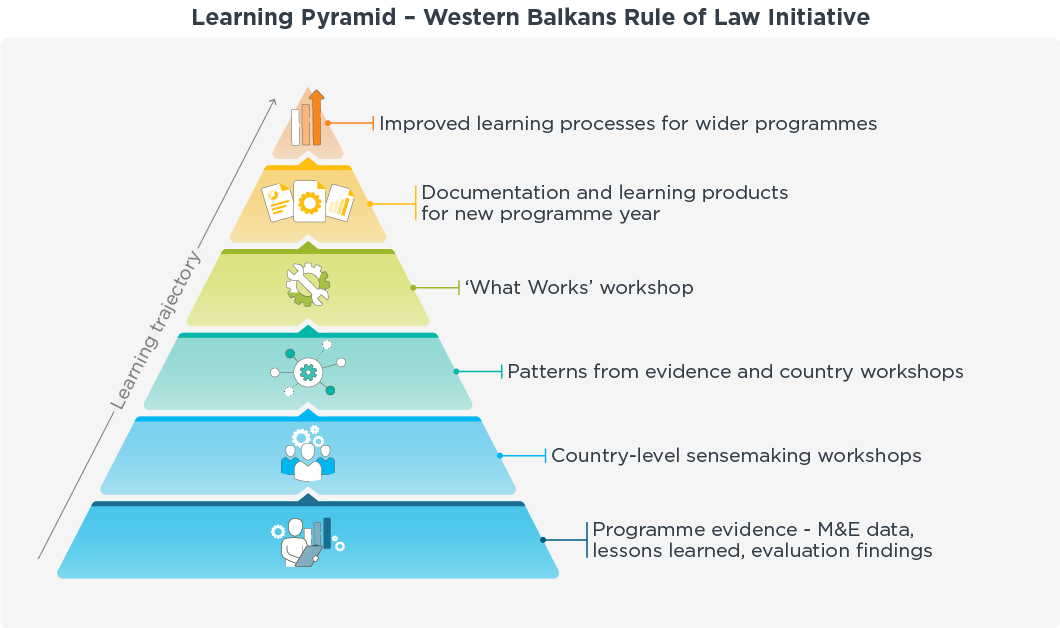

1. A Learning Pyramid

Our Conflict, Stability and Security Fund (CSSF) funded Western Balkans Rule of Law Initiative (WBROLI) works across five Balkan countries to support criminal justice and integrity institutions. The programme worships at the altar of Problem Driven Iterative Adaptation and Thinking and Working Politically, and so uses a blend of live evidence to identify windows of opportunity for small, catalytic interventions that support partner institutions in pursuing their anti-corruption and justice goals. Learning continually emerges and feeds back into programme adaptation, but there is rarely a chance to step back and look at the bigger picture. We changed that.

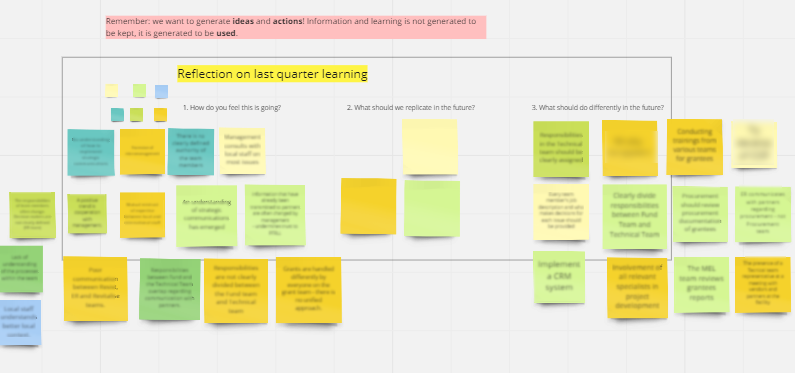

After eight months of programming, WBROLI needed to learn what was working and where consistent patterns lay in its successes and failures. The why was clear: it needed to consider strategic adaptations to its approach and expertise for the new financial year. The who, however, was interesting. A lean team runs the programme and draws from pools of local and international experts. The team leader had managed to hire a very adaptive, dynamic group who in turn had replicated this in the expertise available. This meant a large pool of analytic and colourful colleagues who love to get stuck into evidence (many of whom share our deep affection for Post-Its and magic whiteboards), but without much time for a mammoth learning task. As a result, the WBROLI MEL Manager and Senior MEL Advisor worked with Becky Austin, and together we built a learning pyramid over a period of three months.

Firstly, the base came from a synthesis of programme monitoring and evaluation data, analysis of lessons learned over the year, a literature review, and a mini evaluation. This yielded foundations of “what works” as well as other relevant learning. To build the next layer, we then held short, digital, country-specific sensemaking workshops that blended discussion and interactive exercises, helping teams and experts unpack the evidence in relation to their country theory of change and experience. This allowed for different learning preferences to be engaged, while being respectful of different time zones and availability, and the team’s love of being included.

The team then synthesized the emergent patterns from the evidence and sensemaking workshops to create the next layer of cross-cutting learning…and convened all 40+ team members and experts into a full-day “What Works” workshop in Montenegro. The day blended exercises for both introverted and extroverted personality types and different learning styles (such as listening to Learning Journeys, building Learning Caterpillars and small group analytical work) and was highly fruitful: those attending demonstrably learned, and also generated new learning for the programme to consider. The results directly fed into the Programme Board documents for the new year and are informing a range of learning products for different users.

This took time. Yet the results were much more fruitful than traditional approaches and helped reshape the programme strategy, opening eyes to what learning can achieve.

2. Tailoring approaches for different learning needs

On our multi-donor Partnership Fund for a Resilient Ukraine (PFRU), we found large differences in what needed to be learned, and why, depending on people’s role and learning style. In response, we created layers of learning approaches. For example, we use Miro-board based “fireside chats” with staff delivering projects on a day-to-day basis. This creates an informal way to reflectively learn and recognizes that most team members seem to prefer visual and written learning styles. These sessions are facilitated in Ukrainian by our fantastic MEL Manager, who gently guides teams to collectively reflect on what they have learned, and what they will do differently. With project leaders we have regular, semi-structured learning conversations. These are 1:1 and allow more focused reflection on what they have learned and what this might mean for the strategic direction of their work. They are often led by the MEL Director or MEL Manager in English or Ukrainian, with the goal to support leadership to identify technical learning, and clear actionable steps to incorporate this. As we are now one year into PFRU and new staff are joining to deliver new projects, we are re-reviewing our learning structures to ensure they are still relevant to the learning goals of the programme and the learning styles and needs of its staff.

3. Rich learning for the time poor

Our Cross-Border Conflict Evidence, Policy and Trends (XCEPT) programme is an incredibly fast-paced research programme. Besides a complex long-term research agenda, it often delivers time-sensitive research in response to emerging trends and issues across the globe. Standard learning and reflection sessions are an ineffective tool for a team that has scarce time but needs to remain agile to changing contexts. Understanding the nuances of the team, we piloted an asynchronous approach to learning. We embedded a tailored Results and Evidence Sheet (RES) tool that captures observational evidence and builds “learning chains” in real time. Once a change is observed, whether big or small, positive or negative, it is captured by programme staff, who continue to feed into the RES when further changes are seen. This documentation of learning in real time builds a chain of evidence to readily inform programme implementation and decision making, and to show impact over time. The tool is being iterated as the programme is delivered but has already provided an approach to meaningfully capture and connect learning journeys, completely tailored to the needs of programme staff.

Conclusion

These examples all demonstrate the value of putting people at the centre of our learning. We need to give the same amount of thought to our programme’s learning as we do to our personal learning journeys. So, before you jump into the same old “pause and reflect” sessions, take a step back and think about a few things. What are your team’s dynamics, learning styles, and engagement styles? You might find that some teams respond better to lively and colourful brainstorming sessions, while others prefer 1:1 learning conversations to capture their experience. What does your programme need to do? After all, a programme responding to a moving frontline in a conflict will have very different learning needs to one building momentum to lobby their local government.

Don’t be afraid to break out of the “pause and reflect” box and get creative with your learning methods. You are not being irresponsible by trying something no one else has; you are making it more useful for the people engaging in it. Even if you are already three years into a five-year programme, there’s always room to adapt and improve your learning process. You need to learn, not follow steps – and embrace the very human nature of learning!

Posts on the blog represent the views of the authors and do not necessarily represent the views of Chemonics.